Letters, texts, assemblages, R programming

The previous blog commented on the ‘what is a …’ ontological problematic raised by digital technologies and digital scholarship uses of these. In particular, what is an archive, what is a collection, and what is seriality and its form of ordering, are all de-stabilised then re-stabilised but in a different (how different?) guise. Further issues are raised by using the R programming language to analyse large bodies of text data. Some of these are explored below, and they have been core to work for the last eight days.

Typically the researcher working in the Digital Humanities (that is, using computational methods) is involved in creating, or if a secondary user in re-shaping, the textual corpora for analysis. These are often if not invariably extremely large and composed of assemblages – things from different contexts harnessed together. While the corpora so assembled in a sense remain intact, the kind of parsing and analysis that R is set up to expedite in fact identifies and works with fragments from them. An example might be writing code in R to search all available digital versions of UK newspapers for the period 1900 to 1939 for the words ‘civilisation’ or ‘black’ and their cognates, so as to select passages of 30 words on either side of each such word use, and then modelling and analysing the results in a variety of ways.
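To make the newspaper example a little more concrete, here is a minimal sketch in base R of the 'words on either side' step. It is an illustration only, not the code used in any actual project: the function name `kwic_windows`, the search pattern and the sample sentence are all invented for the purpose, and the tokenising (splitting on white space) is far cruder than a real corpus would need.

```r
# Hypothetical helper: pull out a window of words around each keyword match.
# Base R only; splitting on white space is a deliberate simplification.
kwic_windows <- function(text, pattern, window = 30) {
  words <- strsplit(text, "\\s+")[[1]]
  words <- words[nzchar(words)]  # drop empty strings left by leading spaces

  # Match against a lower-cased, punctuation-free copy, so that
  # "Civilisation," is still found; the original words are kept for output.
  clean <- tolower(gsub("[^[:alnum:]]", "", words))
  hits <- grep(pattern, clean)

  # One row per match: its position, the word as written, and its context.
  data.frame(
    position = hits,
    keyword = words[hits],
    context = vapply(hits, function(i) {
      from <- max(1, i - window)
      to <- min(length(words), i + window)
      paste(words[from:to], collapse = " ")
    }, character(1)),
    stringsAsFactors = FALSE
  )
}

# Invented sample sentence, standing in for a digitised newspaper page.
sample_text <- paste(
  "The speaker said that civilisation was in danger,",
  "and that the civilised nations must act together."
)

# "^civilis" is an assumed way of catching cognates (civilisation, civilised,
# civilising); a window of 3 is used only because the sample is so short.
result <- kwic_windows(sample_text, "^civilis", window = 3)
print(result[, c("keyword", "context")])
```

Matching on a word stem is a blunt instrument. A pattern such as `^black` would also pick up 'blacksmith' and 'blackboard', so deciding what counts as a cognate remains an analytical matter rather than a coding one. In a real study the window would be set to 30 and the function run over each digitised text in turn.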

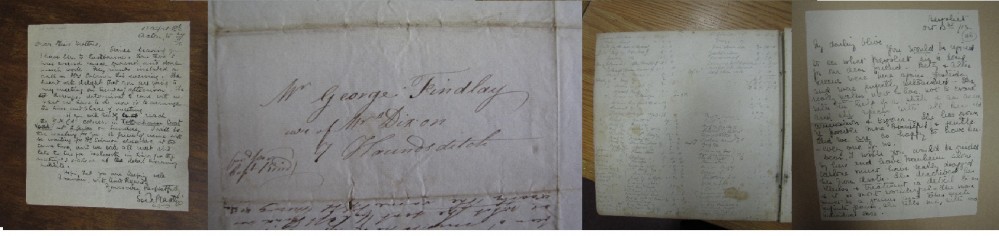

As a methodology in its own right, R is a useful tool when used in an analytically focused investigation, although presently many of the results of its use are ideas short and method long. Put to more thoughtful and theoretically-inspired use, there are already some stunning results that can be placed on the plus side of the scales. In addition, when working with very large amounts of textual data, an individual piece of text (say a letter written on 27 January 1843 to the LMS headquarters in London by one Southern African missionary) has a distant relationship to the many thousands of other letters written by such missionaries from 1797 to 1930, even more so when letters from those in China, New Guinea, Australia and so on are added into the picture. In such situations, which is what WWW research is dealing with although with even more textual data, a close analytical reading of everything is simply not an option. In this context, R is a potentially helpful way of not only re-joining ‘the item’ and ‘the collection’, but also of exploring the text/collection/database/dataset relationship in new ways.

Doing this will require thinking outside the R box, which already has some orthodoxies as to how it can or should be used in the ‘what is it for’ sense. However, this would apply to whatever programming language or software application is pressed into service. At least with R the programmer is the researcher, which will enable some very specific project analyses to be put into operation. Well, that’s the hope and the wish. We’ll see how it pans out over the next year – at next year’s summer institute of the Digital Humanities seminar hosted by NHC North Carolina, all will be revealed!

And a footnote. This short reflection was started in Chapel Hill, North Carolina, added to at Raleigh airport, continued while in the air, and then finished and dispatched from Philadelphia just before leaving for Manchester, UK. A well-travelled blog!

Last updated: 14 June 2015